The Alignment Problem in Child Welfare

...and what drives foster care removals.

Did you know there seems to be little or no relationship between the amount and severity of child maltreatment and the number of cases reported, investigated, and substantiated?¹ Or between the number of children who are removed to foster care and the overall safety of children?

Instead, the system is aligned to get the results that policymakers ask for — whether they realize they want those results or not. Do they want safety first? Lower caseloads? How do they balance removals against preserving the right to family integrity?

An exploration of the way machines learn helps explain this alignment problem.

Let’s start with the definition of “alignment.” I’ve taken the term from Brian Christian’s book on artificial intelligence, The Alignment Problem: Machine Learning and Human Values. In this groundbreaking work, Christian discusses three kinds of machine learning: (1) unsupervised learning, where “a machine is given a heap of data and told to find patterns”; (2) supervised learning, where the machine is “given a series of categorized or labeled examples and told to make predictions”; and (3) reinforcement learning, where the system is placed into an environment with rewards and punishments and told to minimize punishment and maximize reward.

In each of these cases, failing to understand the complexities of the processes and data being fed to the machine can produce outcomes that are out of alignment with the “trainer’s” intentions. Those who feed the machine information may not know (1) what problem they are actually trying to solve or (2) what unintended consequences their learning algorithm might have. Here are two examples of machine learning gone wrong that Christian describes:

A popular risk-assessment software package used in criminal courts, Correctional Offender Management Profiling for Alternative Sanctions (COMPAS), was found to produce recommendations that were biased against African-American defendants. A retrospective analysis found that Black defendants who did not go on to reoffend were nearly twice as likely as white defendants who did not reoffend to have been rated higher-risk for recidivism.

Shortly after it was rolled out, Google’s AI photo-recognition software misidentified Black individuals as gorillas. The problem was likely that the human faces Google’s machine learning algorithm had “learned” to recognize were overwhelmingly white faces, so the system failed to recognize darker-skinned faces as human.

Machine learning finds hidden correlations in data. So do people. If management signals, explicitly or tacitly, that case managers should favor, for example, placing children in foster care over preserving families, case managers will do just that.

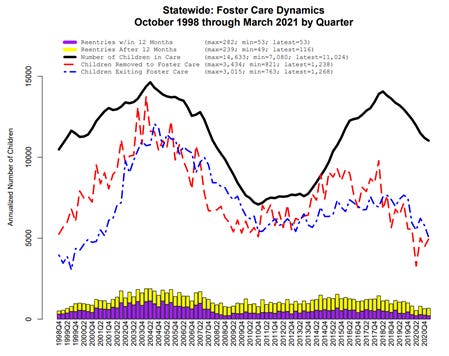

The pattern of foster care removals in my home state, Georgia, provides a good example. The chart here shows the number of children removed to foster care between 2000 and 2021. Removals surged between 1999 and 2004, dropped sharply between 2005 and 2011, and then surged again between 2014 and 2018.

Why? The answer had nothing to do with the amount or severity of child abuse and neglect occurring in Georgia. There’s no evidence that child abuse rates surged between 2000 and 2005 or dropped between 2005 and 2008. Rather, the organizational “machine” of Georgia’s Division of Family and Children Services (DFCS) aligned itself to respond to the data inputs from its “programmers.”

The data those “programmers” fed into the system, one can conclude, were (1) leadership’s messages about how to handle child abuse reports and (2) media and political responses to child abuse tragedies.

Terrell Peterson’s photo appeared on the cover of the November 5, 2000, issue of Time magazine under the headline “The Crisis of Foster Care.” Terrell suffered atrocious abuse at the hands of the caregiver with whom Georgia DFCS had placed him following numerous reports of abuse by his parents. After additional tragedies involving children whom Georgia’s Division of Family and Children Services had left in their homes, and the media coverage that followed, the Governor appointed a new director with a law enforcement background who emphasized removal to foster care as the solution to child maltreatment. The foster care population shot up to almost 15,000, with no corresponding improvement in child safety.

Recognizing that such surges could overwhelm the system, the state reversed those policies and, under a new Commissioner of Human Resources, B.J. Walker, began reducing removals and the foster care population. She and her leadership team closely tracked the number of children in care, the number of investigations, and the agency’s case manager caseloads, and those performance measures and outcomes were highlighted at regular regional and county agency meetings. The use of “diversion” (an alternative response to an investigation in which families are referred to voluntary services), combined with a constant focus on reducing caseloads and foster care numbers, cut the foster care population by 50%.

After Walker left, the foster care population rose substantially again, driven by a spate of tragic child deaths that drew intense media attention and led the Governor to “change direction.”

During none of these swings in the foster care population did anyone necessarily tell case managers to bring more children into care, or to forgo investigating allegations of child abuse and instead refer families to voluntary services. Written policy has changed little over the past 20 years: foster care is to be used when a child is at imminent risk of harm from abuse or neglect. Families have the right to privacy and integrity, and the State should intervene only when necessary to protect a child’s safety.

What has changed is the messaging from leadership and from society. As with artificial intelligence and machine learning, an organization’s employees will take the unspoken “rules” and apply them to specific cases to produce what appears to be the “expected” result. The organization itself, like a machine that learns, will infer the desired outcome from the messages that politicians, agency leadership, and society send through communications, policy, disciplinary actions, praise, and promotions.

Child welfare organizations, therefore, must be careful to ensure that the messages they are sending are precisely aligned with the outcomes they truly desire.

Hat tips to Andy Barclay, Christopher Church, and Melissa Carter for this insight.